What will you learn in this section?

- Overview of the Bagging Model

Bagging (bootstrap aggregating) trains multiple weak learners on different samples of the training data. It uses multiple homogeneous models (mostly decision trees), each trained on a subset of the training data rather than on the entire dataset.

Weak learners in bagging are deep trees that tend to overfit individually: they have low bias and high variance. Bagging mitigates this overfitting by aggregating the predictions of many such models, which reduces the variance of the combined prediction.

Typically, bagging uses a large number of models (50-200); this number is a hyperparameter that needs tuning. The weak learners are independent of each other, so they can be trained in parallel.
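As a rough illustration of treating the number of models as a tunable hyperparameter, the sketch below assumes scikit-learn is installed and uses its `BaggingClassifier`. The synthetic dataset, the candidate values, and the 3-fold cross-validation are assumptions chosen for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (an assumption for this sketch).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

scores = {}
for n_estimators in (50, 100, 200):
    model = BaggingClassifier(
        DecisionTreeClassifier(),   # base model: an unpruned decision tree
        n_estimators=n_estimators,  # number of models in the ensemble
        n_jobs=-1,                  # fit the independent models in parallel
        random_state=0,
    )
    # Mean accuracy over 3 cross-validation folds.
    scores[n_estimators] = cross_val_score(model, X, y, cv=3).mean()

for n_estimators, score in scores.items():
    print(f"n_estimators={n_estimators}: mean CV accuracy = {score:.3f}")
```

In practice the accuracy often levels off after some number of models, so a larger ensemble mainly costs extra training time.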

To ensure that weak learners capture diverse patterns, their predictions should be as uncorrelated as possible. This is encouraged by training each model on a different subset of the data.
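One standard way to see why correlation matters is to look at the variance of an averaged prediction. The symbols here are assumptions introduced for illustration: $B$ models $f_1, \dots, f_B$ whose predictions at a point $x$ each have variance $\sigma^2$ and pairwise correlation $\rho$. Under those assumptions:

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Adding more models shrinks only the second term. The first term stays at $\rho\,\sigma^2$ however large $B$ is, which is why less correlated models give a larger reduction in variance.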

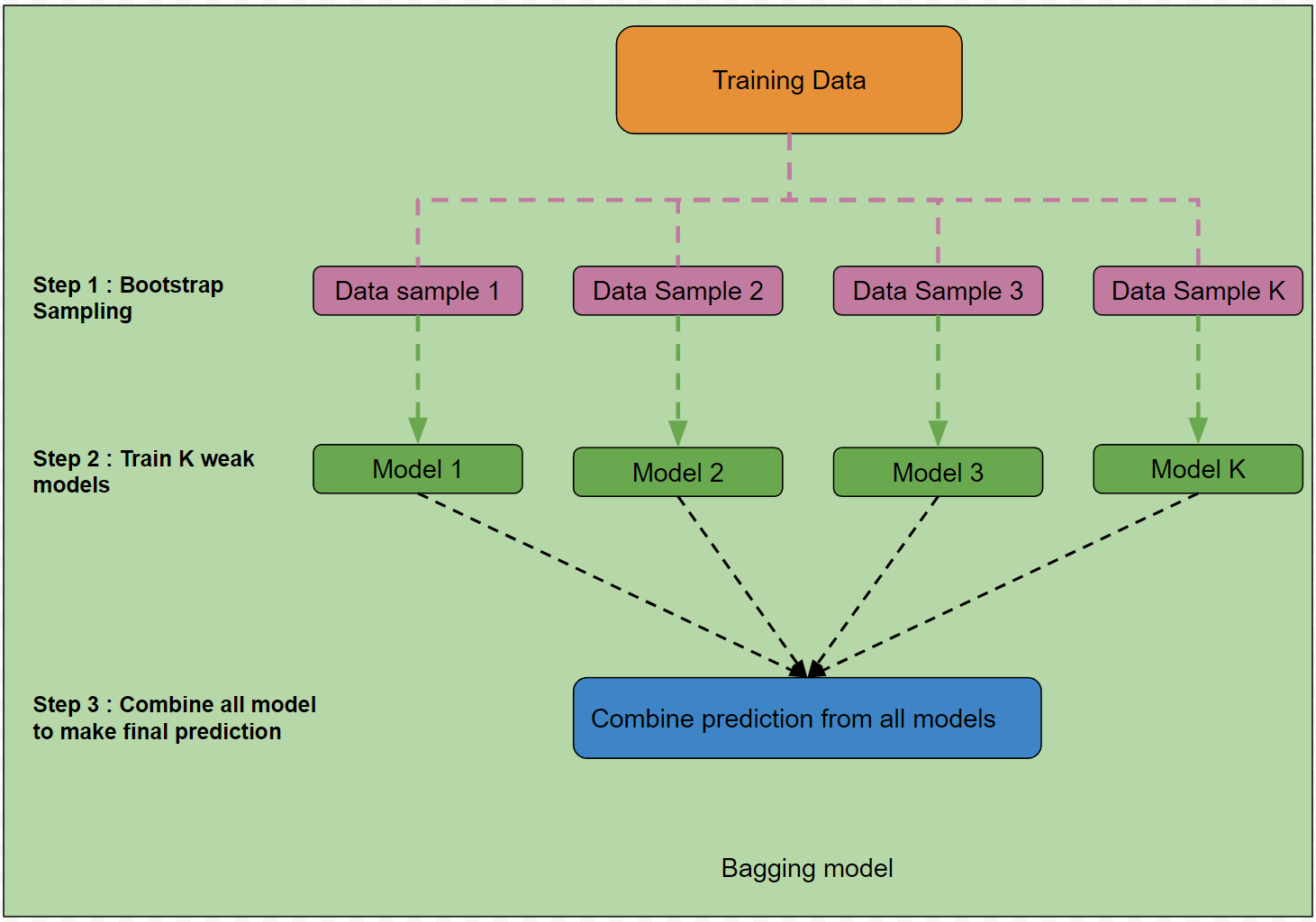

There are three major steps involved in bagging, as illustrated in Diagram 1:

- Bootstrap Sampling

  Bagging uses this technique to create random samples of the training data. It involves random sampling with replacement, so a sample may contain some rows more than once and omit others. For each weak learner, a random sample is drawn from the original data, and the model is trained on that bootstrap sample.

- Train Weak Models

  Bagging trains homogeneous weak learners, typically decision trees (though other models can be used). Diversity among them comes from the bootstrap samples they are trained on. Each weak learner tends to overfit its own training sample, but bagging mitigates this by aggregating many of them.

- Combine Models for Final Prediction

  For classification problems, majority voting is used: the class predicted by the most models wins. For regression, the predictions are averaged.

  Additionally, weighted majority voting (for classification) and weighted averaging (for regression) can be applied, where weights are determined based on each model’s average error. Models with higher errors contribute less to the final prediction.
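The three steps above can be sketched end to end using only the Python standard library. This is a minimal illustration under stated assumptions, not a reference implementation: the helper names (`bootstrap_sample`, `fit_stump`, `fit_bagging`, `predict_bagging`) and the toy data are hypothetical, and a one-feature threshold rule stands in for the deep decision trees that bagging normally uses, purely to keep the code short.

```python
import random
from collections import Counter


def bootstrap_sample(rows, rng):
    """Step 1: draw len(rows) rows uniformly at random, with replacement."""
    return [rng.choice(rows) for _ in rows]


def fit_stump(rows):
    """Step 2 (stand-in learner): fit a threshold rule on (x, label) rows.

    Returns (threshold, left, right): predict `left` when x <= threshold,
    otherwise `right`. Labels are assumed to be 0 or 1.
    """
    best = None
    for threshold in sorted({x for x, _ in rows}):
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != y for x, y in rows
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    return best[1:]


def predict_stump(stump, x):
    threshold, left, right = stump
    return left if x <= threshold else right


def fit_bagging(rows, n_models, seed=0):
    """Train one model per bootstrap sample; the models are independent."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(rows, rng)) for _ in range(n_models)]


def predict_bagging(models, x):
    """Step 3: unweighted majority vote over the individual predictions."""
    votes = Counter(predict_stump(model, x) for model in models)
    return votes.most_common(1)[0][0]


# Toy data (an assumption): label 0 for x in 0..9, label 1 for x in 10..19.
rows = [(x, 0) for x in range(10)] + [(x, 1) for x in range(10, 20)]
models = fit_bagging(rows, n_models=25)
print(predict_bagging(models, 2), predict_bagging(models, 17))
```

For regression, `predict_bagging` would return the mean of the individual predictions instead of the most common label.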

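Random Forest is an example of a bagging algorithm. However, it differs slightly from the method discussed above: in addition to bootstrap sampling, it considers only a random subset of features at each split, which further reduces the correlation between trees. For more details, check Random Forest.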

Diagram 1: Brief Overview of the Bagging Model